🔷 Exercise 2

- The Agent moves toward the Target

- Observes position and Rigidbody values

- Continuous actions: actions.ContinuousActions

🔶 Basic setup

- Organize the folders

https://assetstore.unity.com/packages/3d/characters/free-mummy-monster-134212

- Download the package, then import it

🔶 Agent setup

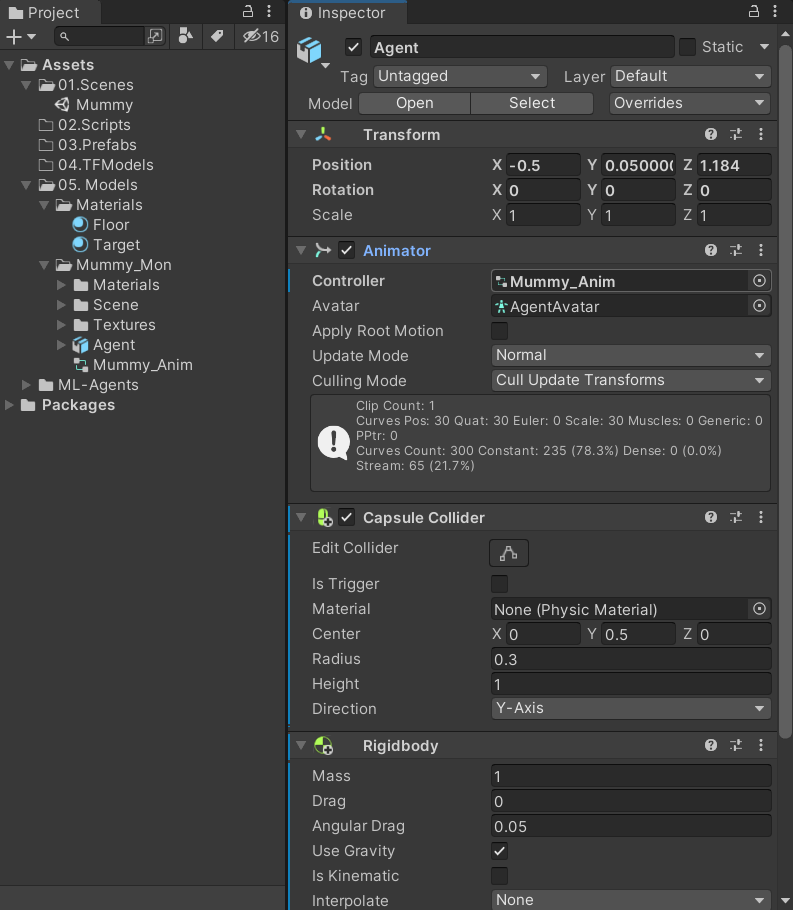

- Rename the Mummy_Mon prefab to Agent

- Connect the material > Apply

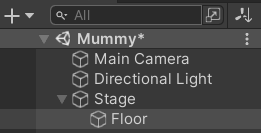

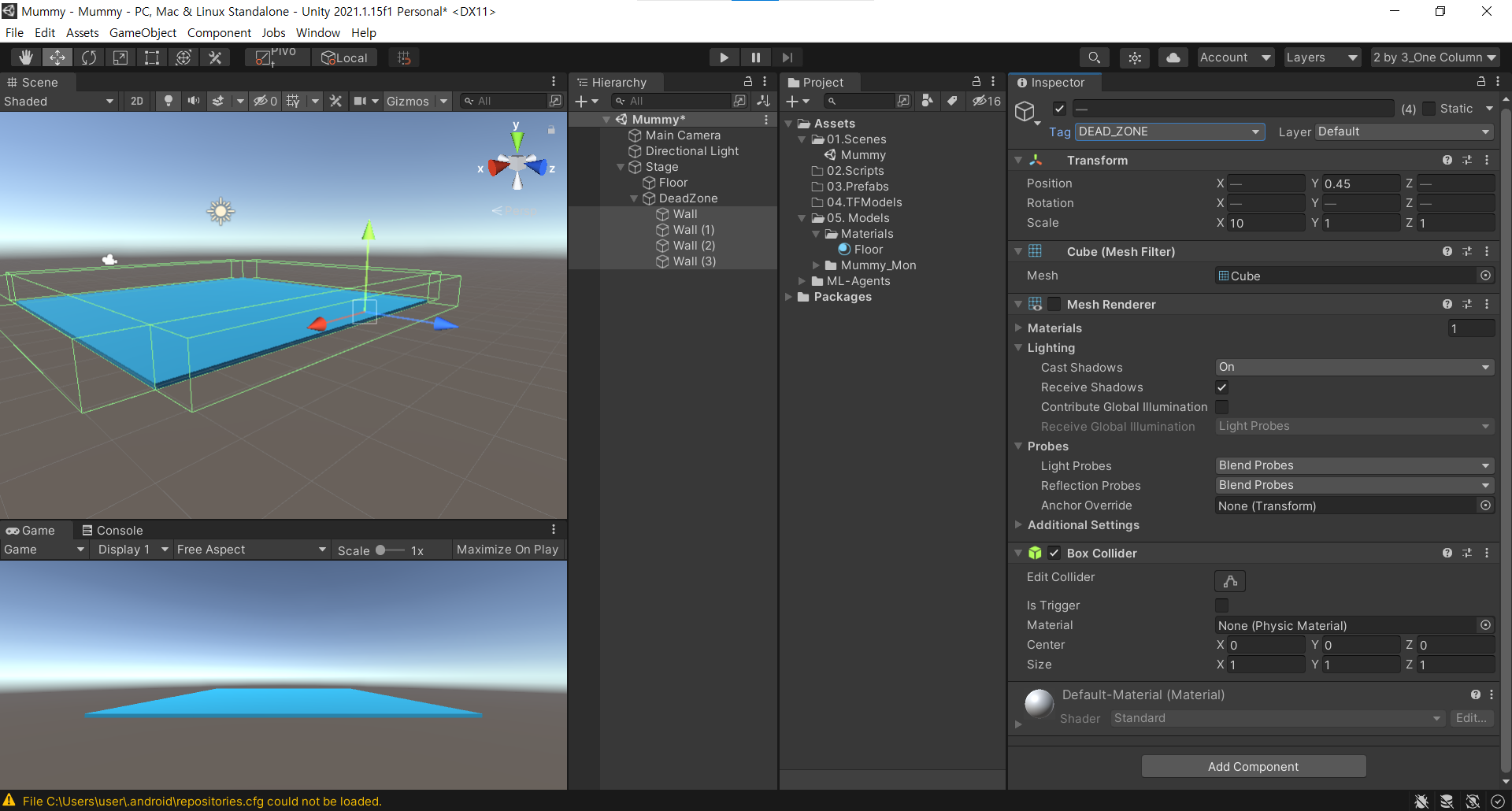

- Empty GameObject (Stage)

- Cube (Floor)

- Empty GameObject (DeadZone)

- Create a Cube (Wall)

- Disable the Mesh Renderer

- Create the Tag DEAD_ZONE > apply it

- Create a Cube (Target)

- Create the Tag TARGET > apply it

- Add the Agent

- Add a Capsule Collider > adjust it

- Add a Rigidbody > Freeze Rotation

- Connect the Animator Controller

🔶 Writing the script: basic ML-Agents functions

- Create MummyAgent.cs

using System.Collections;
using System.Collections.Generic;
using UnityEngine;
using Unity.MLAgents; // ML-Agents namespace

/*
The agent's role
1. Observe the surrounding environment (Observations)
2. Act according to the policy (Action)
3. Receive rewards (Reward)
*/
public class MummyAgent : Agent
{
}

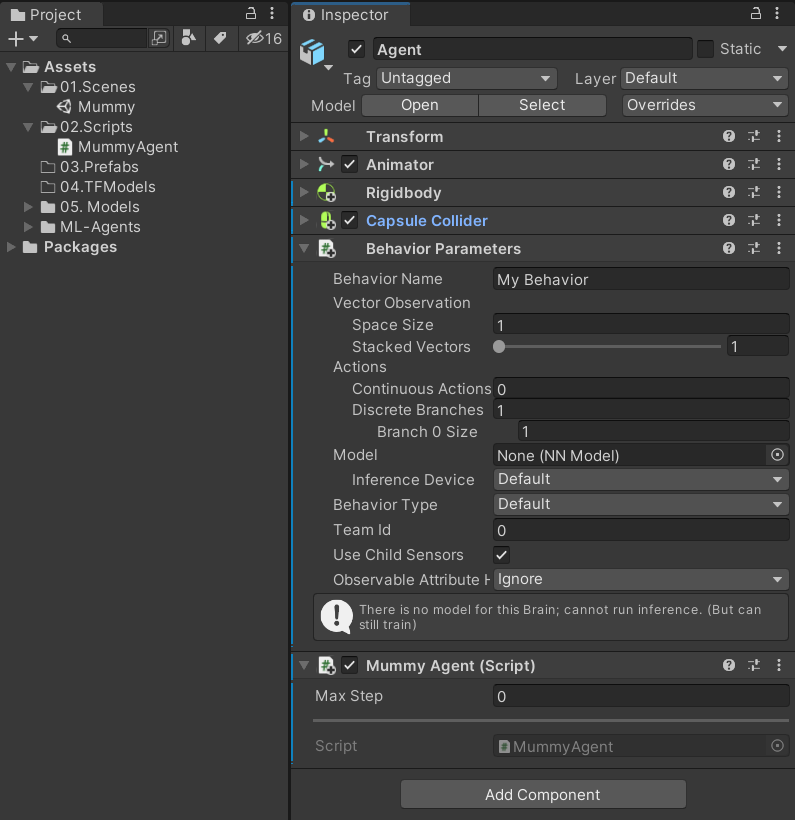

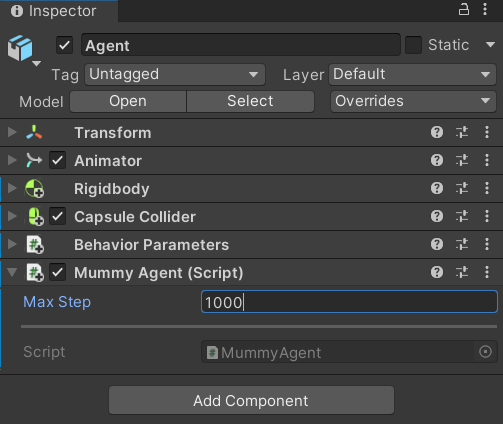

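- Attach MummyAgent.cs to the Agent object

- Max Step: the maximum number of steps per episode. Once the agent has taken this many steps, the episode ends and restarts from the beginning, whether or not a reward was earned (0 means no limit)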
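One way to picture Max Step is as a per-episode step counter that forces a restart when it reaches the limit. The sketch below is a plain C# model of that idea, not ML-Agents code: `EpisodeModel` and its members are hypothetical names, and the real Agent base class does this bookkeeping internally.

```csharp
using System;

// Hypothetical stand-in for the step counting done inside the Agent base class.
public class EpisodeModel
{
    public int MaxStep;                           // 0 is treated as "no limit" in this sketch
    public int StepCount { get; private set; }
    public int EpisodesCompleted { get; private set; }

    public EpisodeModel(int maxStep)
    {
        MaxStep = maxStep;
    }

    // Advance one step; returns true if the episode was cut off by the step limit.
    public bool Step()
    {
        StepCount++;
        if (MaxStep > 0 && StepCount >= MaxStep)
        {
            StepCount = 0;        // the next episode starts counting from zero
            EpisodesCompleted++;
            return true;
        }
        return false;
    }
}
```

With `new EpisodeModel(3)`, the third call to `Step()` returns true and the counter starts over; with a limit of 0 it never returns true, so the episode would only end through an explicit `EndEpisode()` call.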

using System.Collections;
using System.Collections.Generic;
using UnityEngine;
using Unity.MLAgents; // ML-Agents namespace
using Unity.MLAgents.Sensors;
using Unity.MLAgents.Actuators;

/*
The agent's role
1. Observe the surrounding environment (Observations)
2. Act according to the policy (Action)
3. Receive rewards (Reward)
*/
public class MummyAgent : Agent
{
    // Initialization method
    public override void Initialize()
    {
    }

    // Called every time an episode (the unit of training) begins
    public override void OnEpisodeBegin()
    {
    }

    // Callback that observes the surrounding environment
    public override void CollectObservations(VectorSensor sensor)
    {
    }

    // Executes an action based on the data received from the policy
    public override void OnActionReceived(ActionBuffers actions)
    {
    }

    // For developer testing (manual control) / imitation learning
    public override void Heuristic(in ActionBuffers actionsOut)
    {
    }
}

- The basic functions

🔶 Writing the script: observations, actions, and rewards

using System.Collections;
using System.Collections.Generic;
using UnityEngine;
using Unity.MLAgents; // ML-Agents namespace
using Unity.MLAgents.Sensors;
using Unity.MLAgents.Actuators;

/*
The agent's role
1. Observe the surrounding environment (Observations)
2. Act according to the policy (Action)
3. Receive rewards (Reward)
*/
public class MummyAgent : Agent
{
    // 'new' hides the inherited members of the same name
    private new Rigidbody rigidbody;
    private new Transform transform;
    private Transform targetTr;

    // Initialization method
    public override void Initialize()
    {
        rigidbody = GetComponent<Rigidbody>();
        transform = GetComponent<Transform>();
        // Target is a sibling of the Agent under the same Stage parent
        targetTr = transform.parent.Find("Target");
    }

    // Called every time an episode (the unit of training) begins
    public override void OnEpisodeBegin()
    {
        // Reset the physics state
        rigidbody.velocity = Vector3.zero;
        rigidbody.angularVelocity = Vector3.zero;

        // Move the agent to a random position
        transform.localPosition = new Vector3(Random.Range(-4.0f, 4.0f), 0.05f, Random.Range(-4.0f, 4.0f));

        // Move the target to a random position
        targetTr.localPosition = new Vector3(Random.Range(-4.0f, 4.0f), 0.55f, Random.Range(-4.0f, 4.0f));
    }

    // Callback that observes the surrounding environment
    public override void CollectObservations(VectorSensor sensor)
    {
        // 8 values are observed in total
        sensor.AddObservation(targetTr.localPosition);  // (x, y, z): 3 values
        sensor.AddObservation(transform.localPosition); // (x, y, z): 3 values
        sensor.AddObservation(rigidbody.velocity.x);    // (x): 1 value
        sensor.AddObservation(rigidbody.velocity.z);    // (z): 1 value
    }

    // Executes an action based on the data received from the policy
    public override void OnActionReceived(ActionBuffers actions)
    {
    }

    // For developer testing (manual control) / imitation learning
    public override void Heuristic(in ActionBuffers actionsOut)
    {
    }
}

- You need to know exactly how many values are observed

- Set the Behavior Name explicitly

- Space Size: the number of observed values (8 in this script)
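The Space Size has to match what CollectObservations actually adds, so it helps to tally the floats by hand. The following is a small plain C# sketch of that count for the script above; `ObservationSize` is a hypothetical helper for illustration, not part of ML-Agents.

```csharp
using System;

// Hypothetical helper that tallies how many floats the agent observes.
public static class ObservationSize
{
    const int Vector3Floats = 3; // a Vector3 contributes x, y, z
    const int ScalarFloats = 1;  // a single float contributes one value

    public static int Total()
    {
        int targetPosition = Vector3Floats; // targetTr.localPosition
        int agentPosition = Vector3Floats;  // transform.localPosition
        int velocityX = ScalarFloats;       // rigidbody.velocity.x
        int velocityZ = ScalarFloats;       // rigidbody.velocity.z
        return targetPosition + agentPosition + velocityX + velocityZ;
    }
}
```

`ObservationSize.Total()` returns 8, which is the value to enter as Space Size. If an observation is added or removed later, this number has to change with it.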

using System.Collections;
using System.Collections.Generic;
using UnityEngine;
using Unity.MLAgents; // ML-Agents namespace
using Unity.MLAgents.Sensors;
using Unity.MLAgents.Actuators;

/*
The agent's role
1. Observe the surrounding environment (Observations)
2. Act according to the policy (Action)
3. Receive rewards (Reward)
*/
public class MummyAgent : Agent
{
    // 'new' hides the inherited members of the same name
    private new Rigidbody rigidbody;
    private new Transform transform;
    private Transform targetTr;

    // Initialization method
    public override void Initialize()
    {
        rigidbody = GetComponent<Rigidbody>();
        transform = GetComponent<Transform>();
        // Target is a sibling of the Agent under the same Stage parent
        targetTr = transform.parent.Find("Target");
    }

    // Called every time an episode (the unit of training) begins
    public override void OnEpisodeBegin()
    {
        // Reset the physics state
        rigidbody.velocity = Vector3.zero;
        rigidbody.angularVelocity = Vector3.zero;

        // Move the agent to a random position
        transform.localPosition = new Vector3(Random.Range(-4.0f, 4.0f), 0.05f, Random.Range(-4.0f, 4.0f));

        // Move the target to a random position
        targetTr.localPosition = new Vector3(Random.Range(-4.0f, 4.0f), 0.55f, Random.Range(-4.0f, 4.0f));
    }

    // Callback that observes the surrounding environment
    public override void CollectObservations(VectorSensor sensor)
    {
        // 8 values are observed in total
        sensor.AddObservation(targetTr.localPosition);  // (x, y, z): 3 values
        sensor.AddObservation(transform.localPosition); // (x, y, z): 3 values
        sensor.AddObservation(rigidbody.velocity.x);    // (x): 1 value
        sensor.AddObservation(rigidbody.velocity.z);    // (z): 1 value
    }

    // Executes an action based on the data received from the policy
    public override void OnActionReceived(ActionBuffers actions)
    {
        // Continuous: actions.ContinuousActions
        // Discrete:   actions.DiscreteActions
        var action = actions.ContinuousActions;

        // [0] Up, Down
        // [1] Left, Right
        Vector3 dir = (Vector3.forward * action[0]) + (Vector3.right * action[1]);
        rigidbody.AddForce(dir.normalized * 50.0f);

        // Small negative reward on every action step, so idling or wandering is penalized
        SetReward(-0.001f);
    }

    // For developer testing (manual control) / imitation learning
    public override void Heuristic(in ActionBuffers actionsOut)
    {
    }
}

- Two continuous values: Up/Down and Left/Right
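To make the mapping from the two continuous values to a force concrete, here is a plain C# sketch of the same arithmetic as `dir.normalized * 50.0f`, written without Unity types. `ActionToForce` is a hypothetical name, and the zero-input threshold is an assumption meant to mirror how normalizing a very small vector yields a zero vector in Unity.

```csharp
using System;

// Hypothetical helper reproducing the direction math from OnActionReceived.
public static class ActionToForce
{
    // vertical   = action[0], applied along the forward (z) axis
    // horizontal = action[1], applied along the right (x) axis
    // Returns the x and z components of the applied force.
    public static (float x, float z) Compute(float vertical, float horizontal, float strength = 50.0f)
    {
        float length = (float)Math.Sqrt(vertical * vertical + horizontal * horizontal);
        if (length < 1e-5f)
        {
            return (0f, 0f); // effectively no input, so no force
        }
        // Normalize to unit length, then scale by the force strength.
        return (horizontal / length * strength, vertical / length * strength);
    }
}
```

Because the direction is normalized, only the direction of the action matters and its magnitude is discarded: `Compute(1f, 0f)` and `Compute(0.2f, 0f)` both give a force of 50 along z, and a diagonal input such as `Compute(0.5f, 0.5f)` gives roughly 35.36 on each axis.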

using System.Collections;
using System.Collections.Generic;
using UnityEngine;
using Unity.MLAgents; // ML-Agents namespace
using Unity.MLAgents.Sensors;
using Unity.MLAgents.Actuators;

/*
The agent's role
1. Observe the surrounding environment (Observations)
2. Act according to the policy (Action)
3. Receive rewards (Reward)
*/
public class MummyAgent : Agent
{
    // 'new' hides the inherited members of the same name
    private new Rigidbody rigidbody;
    private new Transform transform;
    private Transform targetTr;

    // Initialization method
    public override void Initialize()
    {
        rigidbody = GetComponent<Rigidbody>();
        transform = GetComponent<Transform>();
        // Target is a sibling of the Agent under the same Stage parent
        targetTr = transform.parent.Find("Target");
    }

    // Called every time an episode (the unit of training) begins
    public override void OnEpisodeBegin()
    {
        // Reset the physics state
        rigidbody.velocity = Vector3.zero;
        rigidbody.angularVelocity = Vector3.zero;

        // Move the agent to a random position
        transform.localPosition = new Vector3(Random.Range(-4.0f, 4.0f), 0.05f, Random.Range(-4.0f, 4.0f));

        // Move the target to a random position
        targetTr.localPosition = new Vector3(Random.Range(-4.0f, 4.0f), 0.55f, Random.Range(-4.0f, 4.0f));
    }

    // Callback that observes the surrounding environment
    public override void CollectObservations(VectorSensor sensor)
    {
        // 8 values are observed in total
        sensor.AddObservation(targetTr.localPosition);  // (x, y, z): 3 values
        sensor.AddObservation(transform.localPosition); // (x, y, z): 3 values
        sensor.AddObservation(rigidbody.velocity.x);    // (x): 1 value
        sensor.AddObservation(rigidbody.velocity.z);    // (z): 1 value
    }

    // Executes an action based on the data received from the policy
    public override void OnActionReceived(ActionBuffers actions)
    {
        // Continuous: actions.ContinuousActions
        // Discrete:   actions.DiscreteActions
        var action = actions.ContinuousActions;

        // [0] Up, Down
        // [1] Left, Right
        Vector3 dir = (Vector3.forward * action[0]) + (Vector3.right * action[1]);
        rigidbody.AddForce(dir.normalized * 50.0f);

        // Small negative reward on every action step, so idling or wandering is penalized
        SetReward(-0.001f);
    }

    // For developer testing (manual control) / imitation learning
    public override void Heuristic(in ActionBuffers actionsOut)
    {
        // Map keyboard input onto the same two continuous actions
        var action = actionsOut.ContinuousActions;
        action[0] = Input.GetAxis("Vertical");
        action[1] = Input.GetAxis("Horizontal");
    }
}

- Add the Decision Requester component

// Decision Requester component: requests decisions on the agent's behalf

// Decision Period: with a value of 5, a decision is requested once every 5 steps
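As a rough illustration of what "once every 5 steps" means, the sketch below lists the steps on which a decision would be requested for a given period. It is a simplified plain C# model under the assumption that a decision is requested whenever the step index is a multiple of the period; `DecisionSchedule` is a hypothetical name, and the actual component may differ in detail between ML-Agents versions.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical model of the Decision Period setting.
public static class DecisionSchedule
{
    // Lists the step indices in [0, totalSteps) on which a decision would be requested.
    public static List<int> DecisionSteps(int totalSteps, int decisionPeriod)
    {
        var steps = new List<int>();
        for (int step = 0; step < totalSteps; step++)
        {
            if (step % decisionPeriod == 0)
            {
                steps.Add(step);
            }
        }
        return steps;
    }
}
```

`DecisionSchedule.DecisionSteps(12, 5)` yields steps 0, 5, and 10. A larger period means fewer policy queries per second of simulation, which is cheaper but makes the agent react more slowly.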

using System.Collections;
using System.Collections.Generic;
using UnityEngine;
using Unity.MLAgents; // ML-Agents namespace
using Unity.MLAgents.Sensors;
using Unity.MLAgents.Actuators;

/*
The agent's role
1. Observe the surrounding environment (Observations)
2. Act according to the policy (Action)
3. Receive rewards (Reward)
*/
public class MummyAgent : Agent
{
    // 'new' hides the inherited members of the same name
    private new Rigidbody rigidbody;
    private new Transform transform;
    private Transform targetTr;

    public Material goodMAT;    // floor material shown when the target is reached
    public Material badMAT;     // floor material shown when a dead zone is hit
    private Material originMAT; // original floor material
    private Renderer floorRd;

    // Initialization method
    public override void Initialize()
    {
        rigidbody = GetComponent<Rigidbody>();
        transform = GetComponent<Transform>();
        // Target and Floor are siblings of the Agent under the same Stage parent
        targetTr = transform.parent.Find("Target");
        floorRd = transform.parent.Find("Floor").GetComponent<Renderer>();
        originMAT = floorRd.material;
    }

    // Called every time an episode (the unit of training) begins
    public override void OnEpisodeBegin()
    {
        // Reset the physics state
        rigidbody.velocity = Vector3.zero;
        rigidbody.angularVelocity = Vector3.zero;

        // Move the agent to a random position
        transform.localPosition = new Vector3(Random.Range(-4.0f, 4.0f), 0.05f, Random.Range(-4.0f, 4.0f));

        // Move the target to a random position
        targetTr.localPosition = new Vector3(Random.Range(-4.0f, 4.0f), 0.55f, Random.Range(-4.0f, 4.0f));

        StartCoroutine(RevertMaterial());
    }

    // Restore the original floor material shortly after the episode restarts
    IEnumerator RevertMaterial()
    {
        yield return new WaitForSeconds(0.2f);
        floorRd.material = originMAT;
    }

    // Callback that observes the surrounding environment
    public override void CollectObservations(VectorSensor sensor)
    {
        // 8 values are observed in total
        sensor.AddObservation(targetTr.localPosition);  // (x, y, z): 3 values
        sensor.AddObservation(transform.localPosition); // (x, y, z): 3 values
        sensor.AddObservation(rigidbody.velocity.x);    // (x): 1 value
        sensor.AddObservation(rigidbody.velocity.z);    // (z): 1 value
    }

    // Executes an action based on the data received from the policy
    public override void OnActionReceived(ActionBuffers actions)
    {
        // Continuous: actions.ContinuousActions
        // Discrete:   actions.DiscreteActions
        var action = actions.ContinuousActions;

        // [0] Up, Down
        // [1] Left, Right
        Vector3 dir = (Vector3.forward * action[0]) + (Vector3.right * action[1]);
        rigidbody.AddForce(dir.normalized * 50.0f);

        // Small negative reward on every action step, so idling or wandering is penalized
        SetReward(-0.001f);
    }

    // For developer testing (manual control) / imitation learning
    public override void Heuristic(in ActionBuffers actionsOut)
    {
        // Map keyboard input onto the same two continuous actions
        var action = actionsOut.ContinuousActions;
        action[0] = Input.GetAxis("Vertical");
        action[1] = Input.GetAxis("Horizontal");
    }

    // Reward handling logic
    void OnCollisionEnter(Collision coll)
    {
        if (coll.collider.CompareTag("DEAD_ZONE"))
        {
            floorRd.material = badMAT;
            SetReward(-1.0f);
            EndEpisode(); // end the current episode
        }

        if (coll.collider.CompareTag("TARGET"))
        {
            floorRd.material = goodMAT;
            SetReward(1.0f);
            EndEpisode(); // end the current episode
        }
    }
}

- Add rewards

- Visualize whether training is going well: the Floor color changes on collision

- Turn the Stage into a prefab

- Duplicate the stage
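A note on the reward calls used in the script: ML-Agents offers both SetReward, which overwrites the reward for the current step, and AddReward, which adds to it. The plain C# sketch below models only that difference; `StepRewardModel` is a hypothetical class for illustration and not the real Agent implementation.

```csharp
using System;

// Hypothetical model of one step's reward bookkeeping.
public class StepRewardModel
{
    public float StepReward { get; private set; }

    // Accumulates on top of whatever was already given this step.
    public void AddReward(float value)
    {
        StepReward += value;
    }

    // Replaces whatever was already given this step.
    public void SetReward(float value)
    {
        StepReward = value;
    }
}
```

Calling `SetReward(-0.001f)` and then `SetReward(1.0f)` within one step leaves a step reward of 1.0, while the same two values passed to `AddReward` leave about 0.999. With the script as written, whichever SetReward call runs last within a step decides that step's reward, so switching the per-step penalty to AddReward is worth considering if every penalty should count.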

🔶 Training

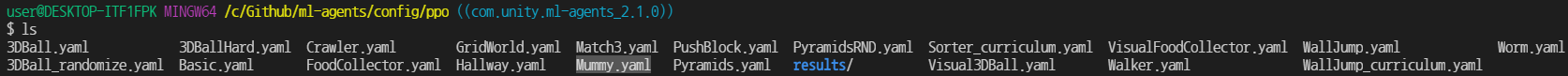

- Copy the 3DBall.yaml file > rename the copy to Mummy.yaml

- The Mummy.yaml file has now been created

- Open Mummy.yaml

behaviors:
  Mummy: # must match the Behavior Name in Unity's Behavior Parameters component
    trainer_type: ppo
    hyperparameters:
      batch_size: 64
      buffer_size: 12000
      learning_rate: 0.0003
      beta: 0.001
      epsilon: 0.2
      lambd: 0.99
      num_epoch: 3
      learning_rate_schedule: linear
    network_settings:
      normalize: true
      hidden_units: 128
      num_layers: 2
      vis_encode_type: simple
    reward_signals:
      extrinsic:
        gamma: 0.99
        strength: 1.0
    keep_checkpoints: 5
    max_steps: 300000 # training ends after 300,000 steps
    time_horizon: 1000
    summary_freq: 10000 # print a summary once every 10,000 steps

- Mummy.yaml

- Be careful that the behavior name matches

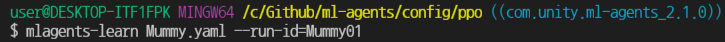

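- Run the command shown above from inside the ppo folder that contains Mummy.yaml

- Press Play in Unity as well

- Training ends after 300,000 steps (the max_steps value in Mummy.yaml)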

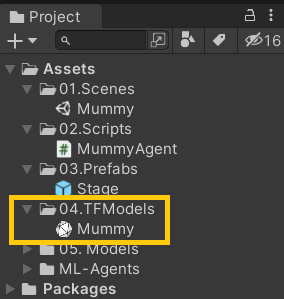

🔶 Applying the trained model

- Drag the trained model (.onnx) into the Project window

- Drag the trained model (.onnx) onto the Agent's Model parameter

- Apply to Prefab

- Press Play to see the trained behavior
